Google expects new hardware, software and artificial intelligence technology to significantly improve photography on its Pixel 7 Pro, and CNET has an exclusive deep dive into what the company has done with its smartphone cameras.

Thanks to its photography technology, Google positions its $899 flagship Android phone as a direct competitor to Apple’s $1,099 iPhone 14 Pro Max.

Google’s Pixel phones haven’t sold as well as Samsung’s and Apple’s models, but they earn high marks for their photos year after year. And if there’s one thing that wins over customers, it’s camera technology.

Last year’s Pixel 6 Pro introduced a “camera bar” that houses three rear cameras: a 50-megapixel main camera, a 0.7x ultra-wide camera and a 4x telephoto camera. The Pixel 7 models keep the same 50-megapixel main camera and f1.85 aperture, housed in a restyled camera bar. The Pixel 7 Pro’s ultra-wide uses the same sensor as last year but adds a macro mode, an f2.2 aperture, autofocus and an even wider 0.5x field of view. The 7 Pro’s telephoto magnification extends to 5x with an f3.5 aperture, and a new 48-megapixel telephoto sensor enables a 10x zoom mode without digital magnification tricks. Both phones also get new front-facing selfie cameras.

But the Pixel 7 Pro’s improved hardware is only part of the story. New AI algorithms and Google’s new Tensor G2 processor speed up Night Sight, deblur faces, stabilize video and combine data from multiple cameras to improve image quality at intermediate zoom levels such as 3x. The aim is a zoom experience closer to that of a traditional camera.

Pixel camera hardware lead Alexander Schiffhauer translates the Pixel 7 Pro’s 0.5x to 10x magnification range into traditional 35mm camera terms. “It’s like the holy grail travel lens,” he said. It’s a versatile range that photography enthusiasts have long prized for its portability and flexibility.
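
The conversion behind a comparison like that is simple multiplication: the zoom factor times the 1x camera’s 35mm-equivalent focal length. The sketch below assumes the main camera is roughly a 24mm equivalent, a typical figure for phone main cameras that the article does not state; the constant and function names are illustrative.

```python
# Assumption: the 1x main camera is roughly a 24mm lens in 35mm-equivalent
# terms. The article does not give this figure.
ASSUMED_MAIN_EQUIV_MM = 24.0


def equivalent_focal_length(zoom: float, base_mm: float = ASSUMED_MAIN_EQUIV_MM) -> float:
    """Convert a zoom factor (relative to the 1x camera) to a 35mm-equivalent focal length."""
    return zoom * base_mm


if __name__ == "__main__":
    # Print the equivalent focal length for each of the phone's fixed zoom stops.
    for zoom in (0.5, 1, 2, 5, 10):
        print(f"{zoom}x -> {equivalent_focal_length(zoom):.0f}mm")
```

Under that assumption, the 0.5x to 10x range works out to roughly 12mm to 240mm.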

Here’s a closer look at what Google is up to.

Virtual 2x and 10x cameras on Pixel 7 Pro

High-end smartphones have at least three rear-facing cameras, so you can capture a wider range of shots. An ultra-wide camera is good for capturing people packed into a room or interesting buildings, and a telephoto camera is good for portraits and more distant subjects.

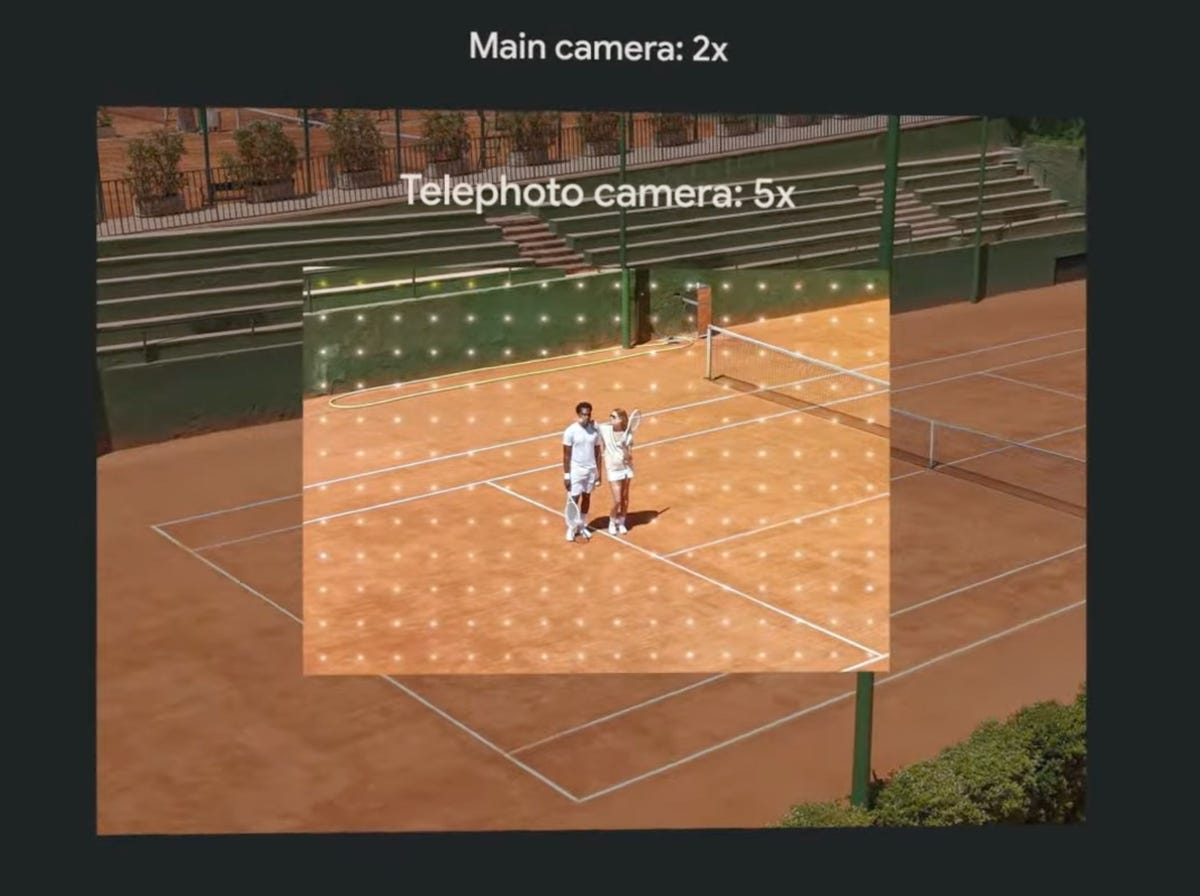

However, large gaps between zoom levels can be a problem. The Pixel 7 Pro’s 50-megapixel main camera and 48-megapixel telephoto camera help fill them.

These cameras typically use a technique called pixel binning, which combines each 2×2 group of pixels into one larger effective pixel. The resulting 12-megapixel shots have better color and more dynamic range between dark and light tones.
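
A minimal sketch of 2×2 binning on a single-channel array, assuming NumPy. Real quad-pixel sensors combine same-color pixels under a color filter array and may sum charge rather than average it; this grayscale version, with a hypothetical function name, only shows the block-combining idea.

```python
import numpy as np


def bin_2x2(sensor: np.ndarray) -> np.ndarray:
    """Average each non-overlapping 2x2 block of pixels into one larger effective pixel."""
    h, w = sensor.shape
    # Drop an odd trailing row/column so the array divides evenly into 2x2 blocks.
    sensor = sensor[: h - h % 2, : w - w % 2]
    # Split rows and columns into (block index, position in block), then average
    # over the two in-block axes.
    return sensor.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
```

Each output pixel averages four samples, which is what lowers noise, and the pixel count drops by a factor of four: about 50 megapixels becomes about 12.5.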

But by skipping pixel binning and using only the central 12 megapixels, the phone can get a 2x shot from the 1x main camera and a 10x shot from the 5x telephoto camera. The smaller pixels mean image quality isn’t as good, but it’s still useful, and Google applies its Super Res Zoom technology to improve color and sharpness. (The 2x mode also works on the Pixel 7.)
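
Keeping only the middle of the sensor is a crop: at 2x, the central half of the width and half of the height, which is a quarter of the pixels (about 12.5 of 50 megapixels). A sketch assuming NumPy, with a hypothetical helper name:

```python
import numpy as np


def center_crop_zoom(sensor: np.ndarray, zoom: float) -> np.ndarray:
    """Keep only the central 1/zoom of the frame in each dimension (a crop, not an upscale)."""
    h, w = sensor.shape[:2]
    ch, cw = int(round(h / zoom)), int(round(w / zoom))
    top, left = (h - ch) // 2, (w - cw) // 2
    return sensor[top : top + ch, left : left + cw]
```

Cropping the 5x camera’s full-resolution frame by a factor of 2 the same way gives the 10x view.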

Apple took the same approach with its iPhone 14 Pro, but only with the main 1x camera.

The Pixel 7 Pro’s 1x camera can take 2x photos using its central pixels, and its 5x camera can take 10x photos the same way.

Google; Screenshot by Stephen Shankland/CNET

Apple has gone further, letting you shoot full 48-megapixel photos with the iPhone 14 Pro’s 1x camera. At 1x, Google always uses pixel binning, so you capture only 12 megapixels.

“Zoom Fusion” for more photo flexibility

When shooting between 2.5x and 5x zoom, the Pixel 7 Pro blends image data from the wide and telephoto cameras. Schiffhauer said this produces better photos than simply enlarging the main camera’s image digitally.

But it’s tricky. The phone has to reconcile two camera perspectives: because the cameras sit in different positions, foreground objects block background objects differently in each view. Cover one eye, then the other, and watch how the scene shifts. The two cameras also have different focal lengths, so what’s in focus differs between them.
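
The mismatch grows with the distance between the lenses and shrinks with subject distance. In a simple pinhole stereo model, a point at depth Z shifts by d = f·B/Z pixels between two views separated by baseline B, where f is the focal length in pixels. The numbers below are hypothetical, and the model assumes both cameras share one focal length, which the Pixel 7 Pro’s do not; it only illustrates why nearby objects misalign far more than the background.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Pinhole stereo model: pixel shift of a point at depth_m between two views."""
    return focal_px * baseline_m / depth_m


# Hypothetical values for illustration, not Pixel 7 Pro specifications.
FOCAL_PX = 3000.0   # focal length expressed in pixels
BASELINE_M = 0.01   # about 1 cm between the two lenses

near_shift = disparity_px(FOCAL_PX, BASELINE_M, 0.5)   # subject 0.5 m away
far_shift = disparity_px(FOCAL_PX, BASELINE_M, 10.0)   # background 10 m away
```

With these assumed values, a subject half a meter away shifts 60 pixels between the views while a background 10 meters away shifts 3, so no single alignment fits the whole frame.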

To avoid visible discontinuities, Google uses artificial intelligence (machine learning) and other processing techniques to decide which parts of each image to keep or reject.
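
As a rough illustration of keep-or-reject fusion, the sketch below pastes telephoto pixels into the center of the wide frame only where the two already-aligned views agree. Google uses machine learning for this decision; the fixed threshold here is a simple stand-in, and `fuse_center` and `max_diff` are hypothetical names.

```python
import numpy as np


def fuse_center(wide: np.ndarray, tele: np.ndarray, top: int, left: int,
                max_diff: float = 0.1) -> np.ndarray:
    """Paste aligned telephoto pixels into the wide frame, rejecting mismatches.

    Assumes `tele` is already registered to `wide` and resampled to the same
    scale, so tele[i, j] corresponds to wide[top + i, left + j].
    """
    fused = wide.astype(float)  # astype returns a new array; the input is untouched
    h, w = tele.shape
    region = fused[top : top + h, left : left + w]  # a view into `fused`
    # Accept telephoto detail only where the two views roughly agree; large
    # differences usually mean occlusion or misalignment from parallax.
    accept = np.abs(tele - region) <= max_diff
    region[accept] = tele[accept]
    return fused
```

A learned model can weigh context such as edges and textures rather than a single per-pixel difference, which is why a hard threshold like this one would leave visible seams in practice.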

Zoom fusion is applied on top of other processing, including HDR+, which merges multiple frames into one image for better dynamic range, and AI algorithms that monitor camera shake and take the picture when the camera is most stable.
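
A simplified sketch of the two ideas in that paragraph: pick the frames captured with the least camera shake, then merge them. HDR+ itself aligns frames and merges them robustly; a plain average of pre-aligned frames is used here only to show why merging helps, since independent noise falls roughly with the square root of the number of frames. The `shake` values stand in for gyroscope data and are a hypothetical input.

```python
import numpy as np


def merge_steadiest(frames: np.ndarray, shake: np.ndarray, keep: int) -> np.ndarray:
    """Average the `keep` frames captured with the least camera motion.

    frames: array of shape (n, h, w), assumed already aligned.
    shake:  per-frame motion magnitude (hypothetical gyroscope-derived input).
    """
    steadiest = np.argsort(shake)[:keep]  # indices of the lowest-motion frames
    # Averaging N frames with independent noise cuts noise by about sqrt(N).
    return frames[steadiest].mean(axis=0)
```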

A technique called zoom fusion improves the quality of photos taken between 2.5x and 5x zoom by adding pixels from the 5x camera to the center of the image. AI helps reconcile differences between the two views.

Google; Screenshot by Stephen Shankland/CNET

Unfortunately, Zoom Fusion isn’t an option if you want to shoot raw photos, an image format that offers high quality and great editing flexibility. You get the full 12-megapixel raw image only at the fixed zoom levels of 0.5x, 1x, 2x, 5x and 10x.

New deblurring technology in Pixel 7 Pro

Google introduced technology in 2021 that combines data from the main and ultra-wide cameras to combat facial motion blur, which can ruin photos. According to Isaac Reynolds, head of Pixel camera software, this face-deblurring technique now kicks in three times as often.

Specifically, it works more often in normal light, is more active in dim light, and works even on unfocused faces.
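
The article doesn’t describe how the phone judges which capture of a face is sharper. One common, simple measure is the variance of the Laplacian, which is high when an image has crisp edges and low when it is blurred. The sketch below uses it to choose between two face crops, for example one from the main camera and one from the ultra-wide; treat it as an illustrative stand-in with hypothetical function names, not Google’s method.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Sharpness score: variance of a 4-neighbour Laplacian (higher means sharper)."""
    g = gray.astype(float)
    # Discrete Laplacian on interior pixels: sum of 4 neighbours minus 4x centre.
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def sharper_face(crop_a: np.ndarray, crop_b: np.ndarray) -> np.ndarray:
    """Return whichever face crop scores as sharper (ties go to crop_a)."""
    return crop_a if laplacian_variance(crop_a) >= laplacian_variance(crop_b) else crop_b
```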

For editing photos after the fact, the Google Photos app now has a new deblurring tool. It even works on old film photos that have since been digitized.

If you want to hear more from Reynolds himself, check out Google’s podcast on Pixel 7 camera tech.

Eye detection and other autofocus upgrades

The Pixel 7 Pro has several autofocus improvements, starting with autofocus hardware on the ultra-wide camera. Across all of its cameras, Google now uses AI algorithms to process focus data from the image sensor.

Like the autofocus systems in high-end cameras from Sony, Nikon and Canon, the Pixel 7 Pro’s camera can find eyes as well as faces.

New AI technology also keeps people in focus better as they move within the scene. “Even if someone turns away from the camera or walks away, we can keep them focused,” says Reynolds.

The Pixel 7 phones also do better when a face is hard to recognize, such as when it’s partly hidden by a big hat or very large black sunglasses.

And with new AI-based autofocus technology, switching to the telephoto camera is much faster. On the Pixel 6 Pro, activating the telephoto camera often caused a pause.

Better photo upscaling

From 5x to 10x zoom, the Pixel 7 Pro uses the central pixels of the 5x camera’s sensor to capture 12-megapixel photos.

Beyond 10x, the camera relies on digital magnification, and its maximum zoom rises from 20x on the Pixel 6 Pro to 30x. Google’s Super Res Zoom method uses camera shake to gather more detailed data about your subject for better zoomed shots.
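
The idea behind Super Res Zoom is that small hand movements shift each frame by a fraction of a pixel, so a burst samples the scene at positions a single frame misses. The textbook version of that idea is shift-and-add, sketched below under the assumption that each frame’s offset is already known; Google’s pipeline estimates motion itself and merges with more sophisticated kernels, so this is only a simplified illustration with a hypothetical function name.

```python
import numpy as np


def shift_and_add(frames: list, shifts: list, scale: int = 2) -> np.ndarray:
    """Accumulate low-res frames onto a finer grid using their sub-pixel offsets.

    frames: list of (h, w) arrays; shifts: list of (dy, dx) offsets in low-res
    pixels, assumed known here (a real pipeline would have to estimate them).
    """
    h, w = frames[0].shape
    acc = np.zeros((h * scale, w * scale))
    weight = np.zeros_like(acc)
    ys, xs = np.mgrid[0:h, 0:w]
    for frame, (dy, dx) in zip(frames, shifts):
        # Nearest high-res cell that each low-res sample lands on.
        hy = np.clip(np.round((ys + dy) * scale).astype(int), 0, h * scale - 1)
        hx = np.clip(np.round((xs + dx) * scale).astype(int), 0, w * scale - 1)
        np.add.at(acc, (hy, hx), frame)
        np.add.at(weight, (hy, hx), 1.0)
    # Average where samples landed; cells no sample reached stay 0 here
    # (a real system would interpolate them).
    return np.divide(acc, weight, out=np.zeros_like(acc), where=weight > 0)
```

With four frames offset by half a pixel in each direction, the samples tile the finer grid exactly, which is the ideal case that natural hand shake only approximates.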

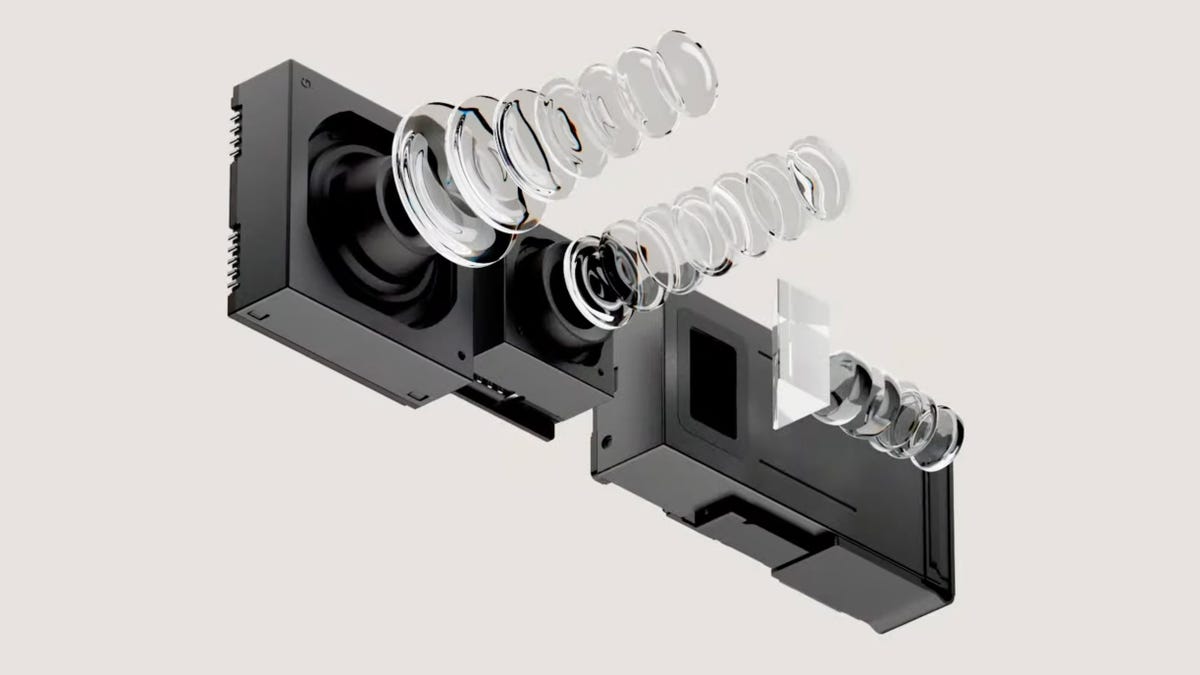

The Pixel 7 Pro has three rear cameras. The main 1x camera has a 50-megapixel sensor, and the 0.5x ultra-wide camera can also capture close-up macro photos. The 5x telephoto camera uses a reflective “periscope” design, mounted inside the phone body to accommodate the long optics it requires.

Google; Screenshot by Stephen Shankland/CNET

Google’s digital zoom can also use AI technology to magnify images. This year, Google trained its AI to better predict new pixels. The phone also calculates a scene attribute called an anisotropic kernel to better predict subtle changes from one pixel to its neighbors and fill in new data more accurately during upscaling.
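
The article doesn’t define the anisotropic kernel, but a standard way to measure how strongly an image patch is oriented in one direction is the structure tensor built from image gradients. Its eigenvalues give a 0-to-1 anisotropy score that could be used to stretch an interpolation kernel along edges rather than across them. A sketch assuming NumPy; the function is hypothetical and not Google’s implementation.

```python
import numpy as np


def local_anisotropy(patch: np.ndarray, eps: float = 1e-12) -> float:
    """Anisotropy from the 2x2 structure tensor of a patch.

    Near 1: one dominant edge direction. Near 0: flat or isotropic texture.
    """
    gy, gx = np.gradient(patch.astype(float))  # derivatives along rows, columns
    # Structure tensor entries summed over the whole patch.
    jxx, jyy, jxy = (gx * gx).sum(), (gy * gy).sum(), (gx * gy).sum()
    tensor = np.array([[jxx, jxy], [jxy, jyy]])
    l2, l1 = np.linalg.eigvalsh(tensor)  # eigenvalues in ascending order
    s1, s2 = np.sqrt(max(l1, 0.0)), np.sqrt(max(l2, 0.0))
    return float((s1 - s2) / (s1 + s2 + eps))
```

A smooth horizontal ramp, where all variation runs along one axis, scores close to 1, while a flat patch scores 0.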

“Obviously, 30x quality is very different from 10x quality,” says Schiffhauer. “You still get really beautiful photos to share.”

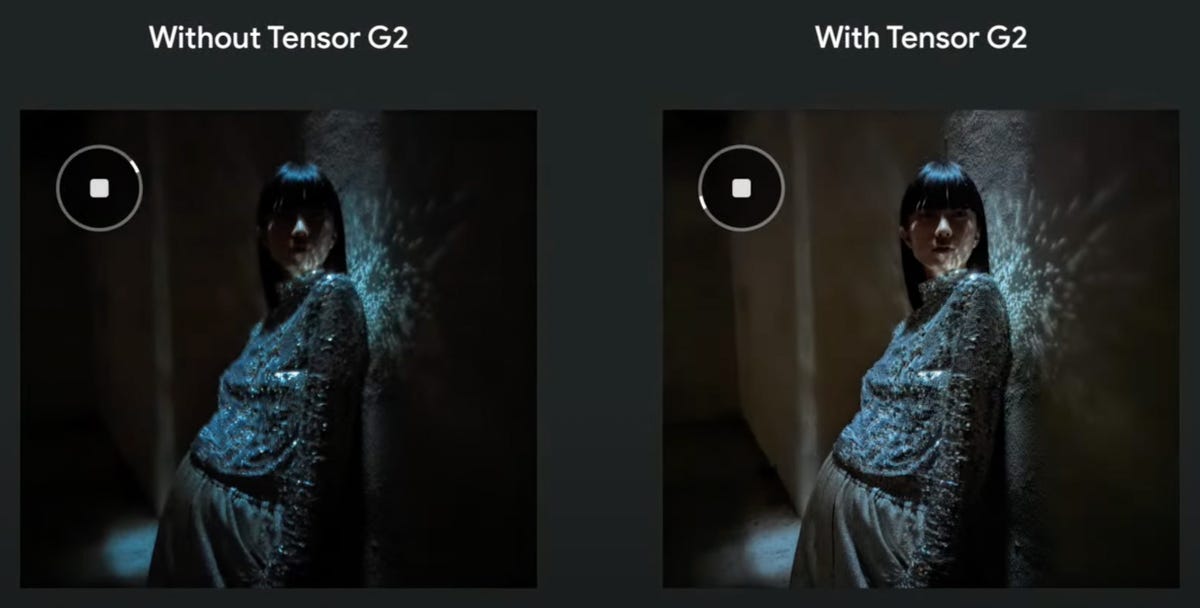

Night mode photo speedup

A pioneering and now widely copied technology for taking better pictures in dim and dark conditions, Night Sight is now twice as fast. That’s because Google uses image frames collected before you press the shutter button. (This is possible because the camera continuously captures frames and holds them in memory, though it keeps them only when you actually take a picture.)

“Users are waiting half the time to take night shots,” says Reynolds. “They’re getting sharper results without paying a penalty in noise.”
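
The pre-shutter trick can be pictured as a ring buffer: the camera keeps overwriting the oldest viewfinder frames, and pressing the shutter starts the merge from frames it already has. In this sketch the class and method names are hypothetical, and the frames are plain placeholders.

```python
from collections import deque


class ZeroShutterLagBuffer:
    """Keep the most recent viewfinder frames so a shot can start from the past."""

    def __init__(self, capacity: int):
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames = deque(maxlen=capacity)

    def on_new_frame(self, frame) -> None:
        self.frames.append(frame)

    def on_shutter_press(self, extra_frames) -> list:
        """Combine buffered (pre-press) frames with frames captured after the press."""
        return list(self.frames) + list(extra_frames)
```

If a shot needs six frames and three are already buffered, only three remain to be captured after the press, which is how reusing earlier frames can roughly halve the wait.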

Google’s Tensor G2 processor doubles the speed of Night Sight photos on Pixel 7 and Pixel 7 Pro, capturing photo frames faster and reducing noise with more effective AI processing.

Google; Screenshot by Stephen Shankland/CNET

The Pixel line’s Astrophotography mode (an extreme version of Night Sight) now better preserves stars with new AI technology that removes speckles of noise.

Better stabilization and other video improvements

Google also overhauled video on the Pixel 7, an area many reviewers saw as a weak point compared with the iPhone. For starters, all of the Pixel 7 Pro’s cameras can now shoot 4K video at up to 60 frames per second, but there’s a lot more.

- The camera can shoot in 10-bit HDR (High Dynamic Range) mode, allowing you to capture scenes with higher contrast, such as bright skies and dark shadows.

- Google now uses its eighth-generation video stabilization technology, which is especially useful for tracking moving subjects at high zoom levels.

- Speech enhancement technology can better capture the subject’s voice when shooting with the rear camera.

- Serious videographers can lock white balance, exposure and focus. This was previously only possible with photos.

- Cinematic Blur allows you to artificially blur the background of your video. This is a feature that was previously only available for photos.

- Mimicking the iPhone’s approach, time-lapse videos are now always 15 to 30 seconds long. Previously, you had to work out the optimal settings yourself.

- The Pixel 7 Pro can also use a technique called Blur Injection to smooth out the occasional jittery video caused by very fast shutter speeds in bright outdoor light.
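
Picking the capture interval for a fixed-length time-lapse is straightforward arithmetic: divide the real recording time by the number of frames the finished clip needs. The sketch assumes 30 fps playback, and Google hasn’t said exactly how it chooses the interval; the function name is hypothetical.

```python
def timelapse_interval(recording_s: float, output_s: float = 15.0, fps: int = 30) -> float:
    """Seconds between captured frames so recording_s of real time becomes output_s of video."""
    frames_needed = output_s * fps  # total frames in the finished clip
    return recording_s / frames_needed
```

An hour of recording squeezed into a 15-second clip at 30 fps needs 450 frames, or one every 8 seconds.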

These improvements show that Google is fighting hard to stay on the cutting edge of smartphone camera technology. “We push hardware, software and machine learning as far as we can,” he said.

Correction, Oct 7: A previous version of this article incorrectly referred to the main 50-megapixel camera on Pixel 7 and 7 Pro smartphones. The camera is the same one used in Pixel 6 models.