The Adobe Experience Platform (AEP) includes various native source connector Data Intrusion into Platforms and Many Natives destination connector Publish data to various marketing destinations, enabled by Real-time Customer Data Platform (RTCDP)However, it’s not uncommon to request data transmission outside of the common marketing activation use cases that the RTCDP feature provides.

Different ways to export data from AEP

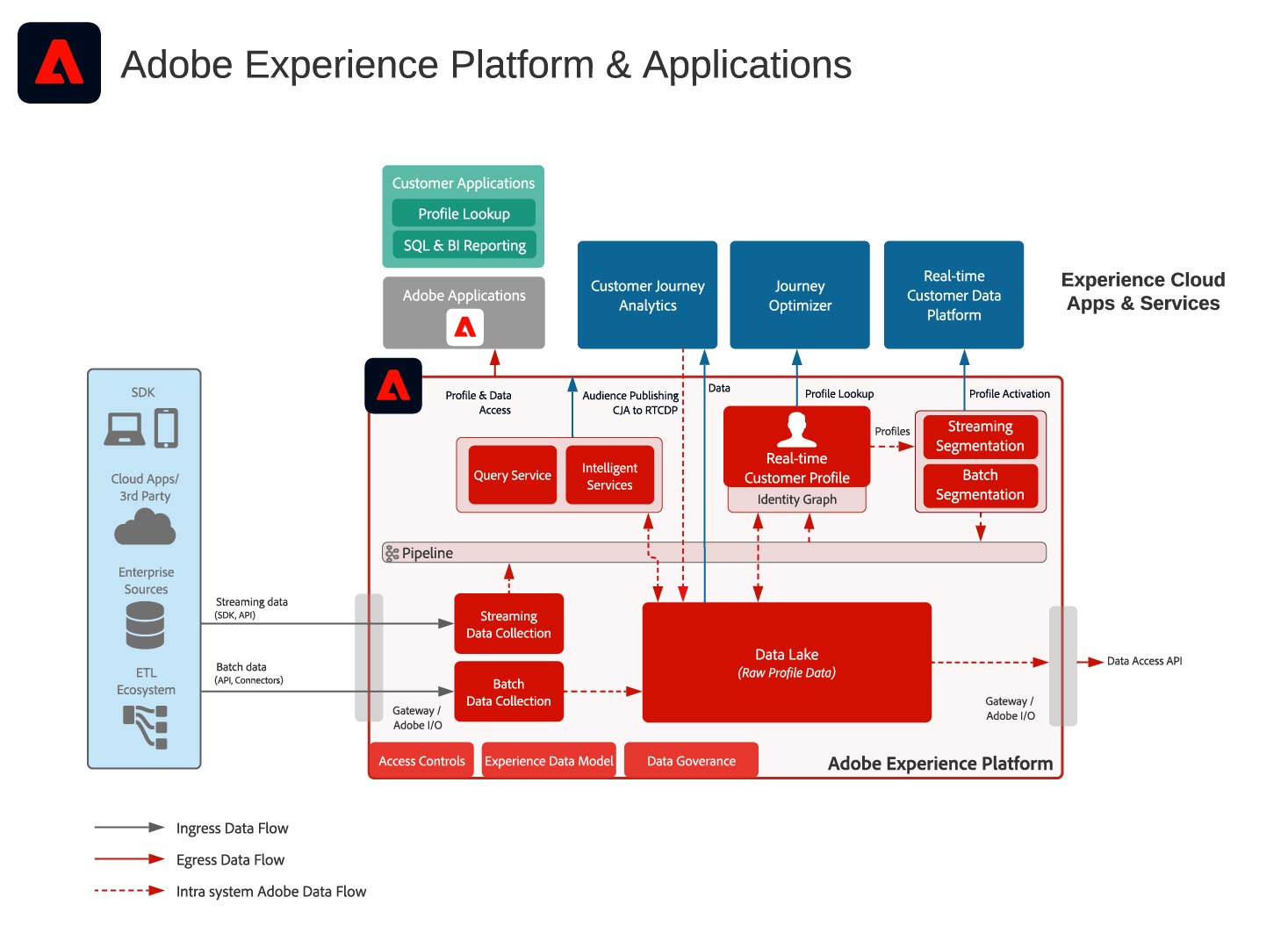

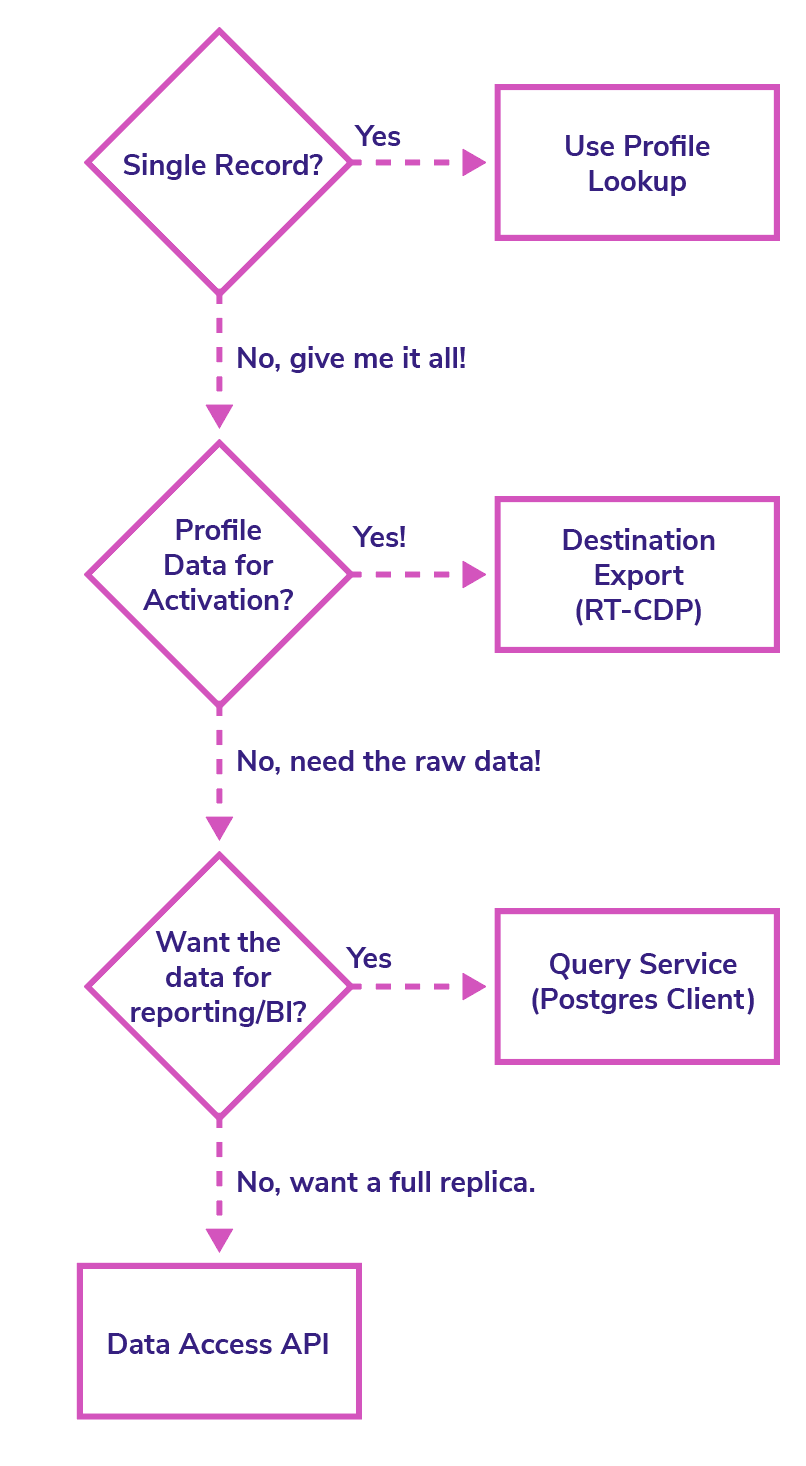

First, let’s look at the platform’s architecture and number the different methods. Extracting data from the platform.

Learn how to create a proof of concept using webhooks and combine it with the Data Access API to create an automated data export pipeline from AEP to Google Cloud Platform (GCP). Personally he wanted to learn more about GCP, so for this tutorial he’s using GCP, but everything here applies to other major cloud providers as well.

Create an automatic export process

1. Create a project in the Adobe Developer Console

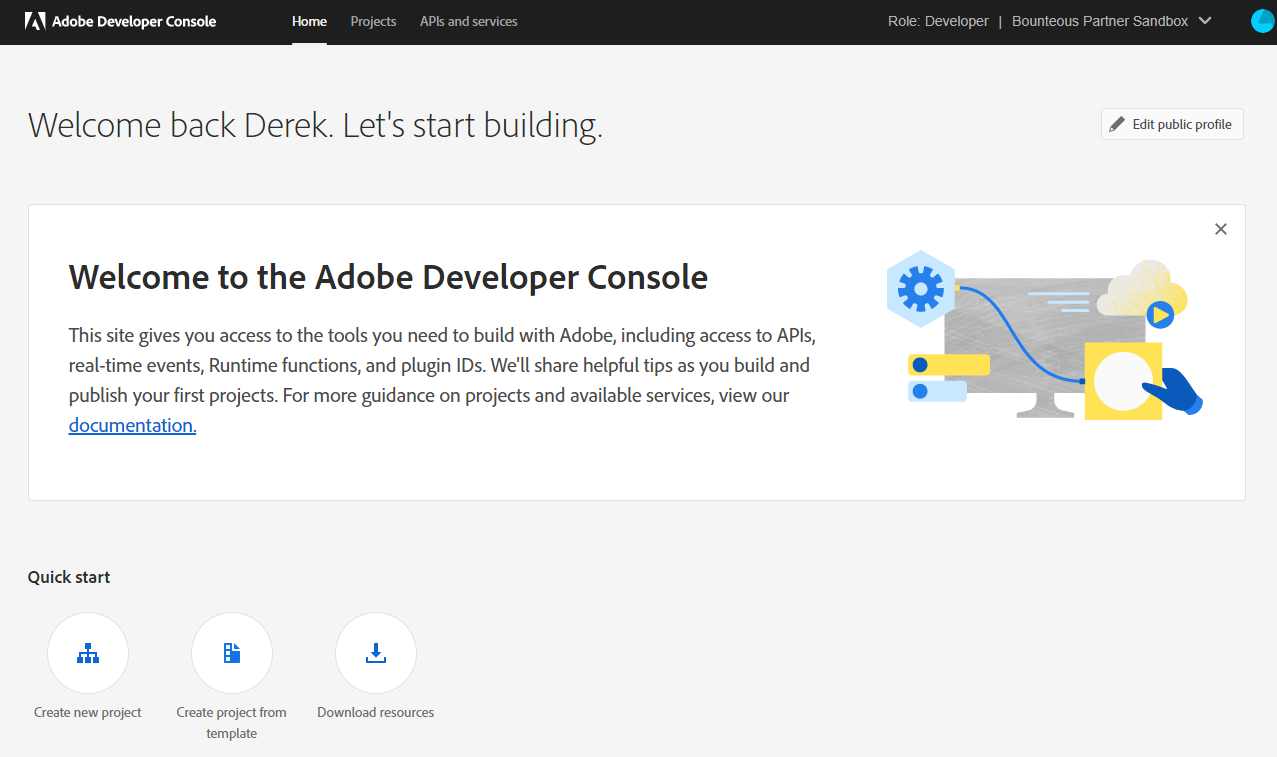

First you need to go to Adobe’s developer consoleIf you don’t already have one, contact your Adobe administrator to grant you developer access for your AEP. If you already have an existing project, feel free to move on.

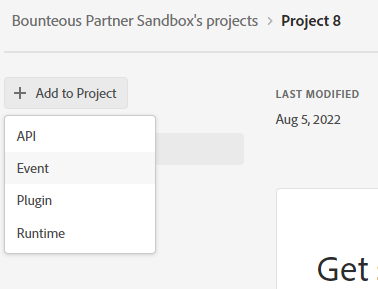

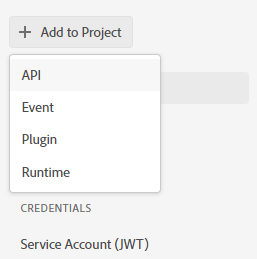

2. Create an API project

3. Webhook events

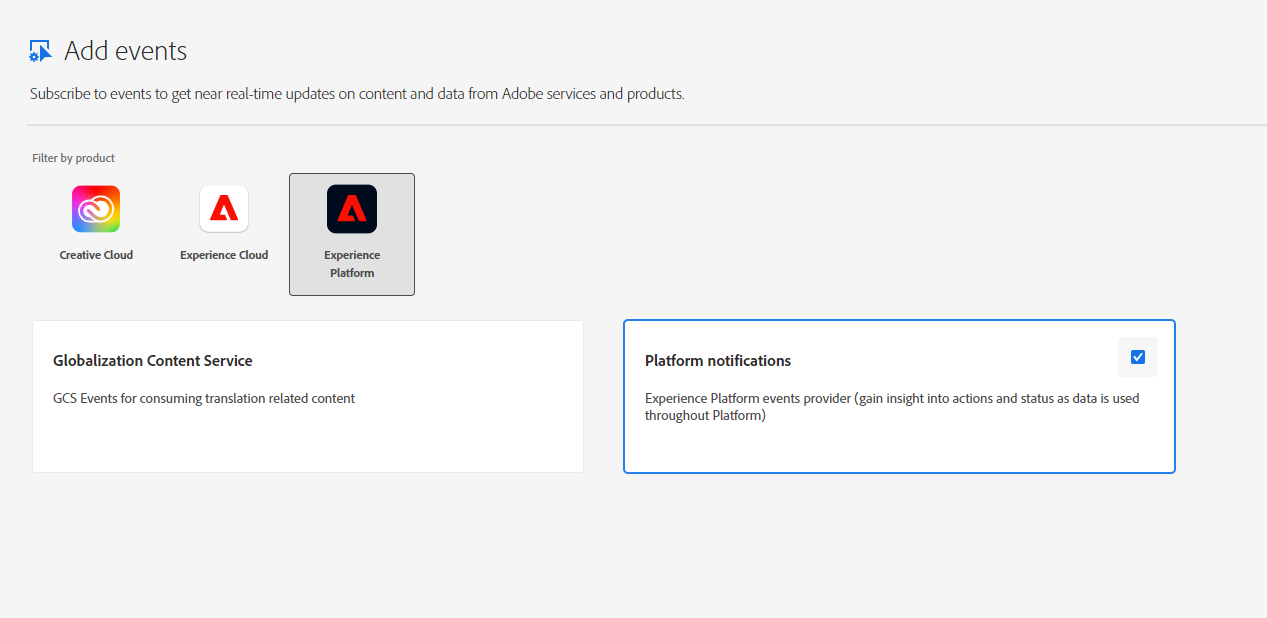

This will “Add Events” Cover it.Then select “Experience Platform” after that “Platform Notifications”.

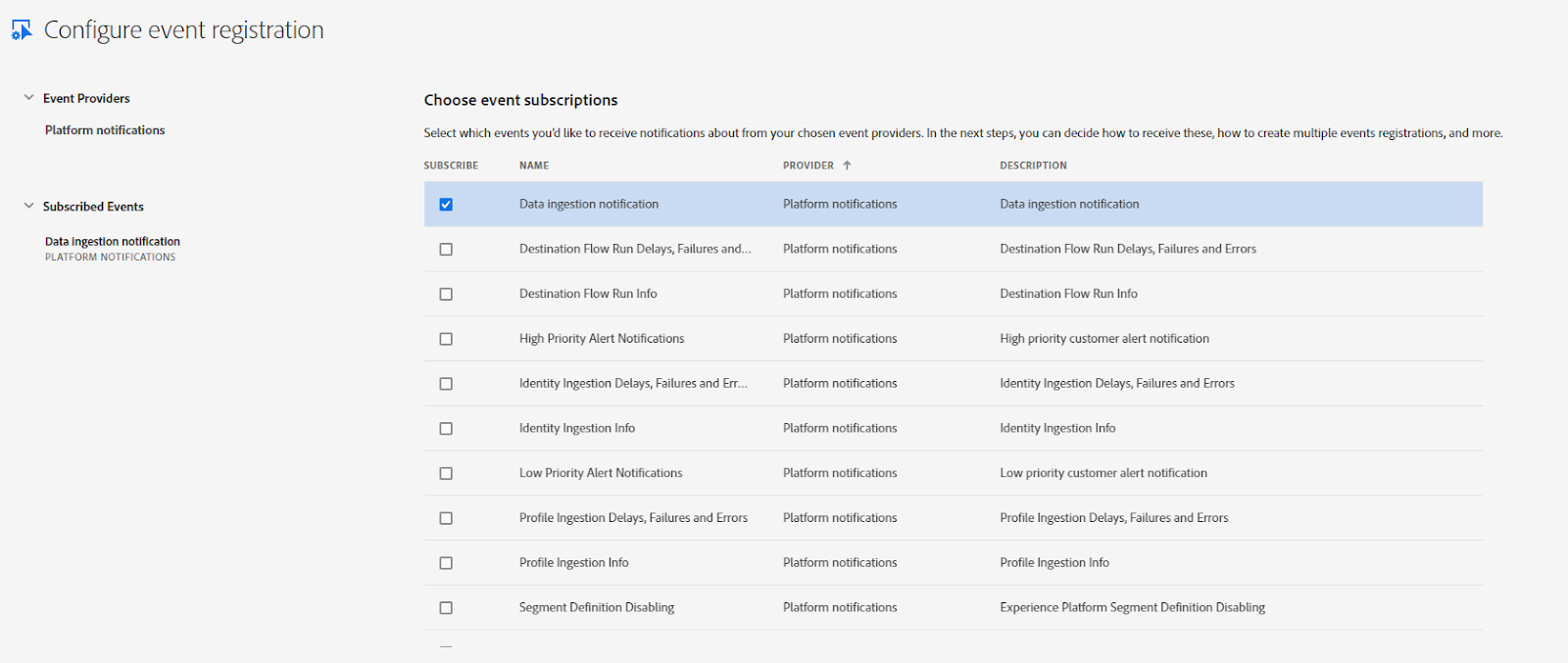

In the next screen there are several different events to subscribe to. For our purposes here, select “Data Ingestion notification”This gives you information about new data ingested into the AEP data lake.

The next screen will ask for the webhook URL. This is optional, but we recommend setting up a webhook via webhook.site so you can see typical webhook payloads. This article Adobe has a great tutorial on setting it up. Enter a dummy URL here and save it if you want to wait until the real webhook for him is created and executed.

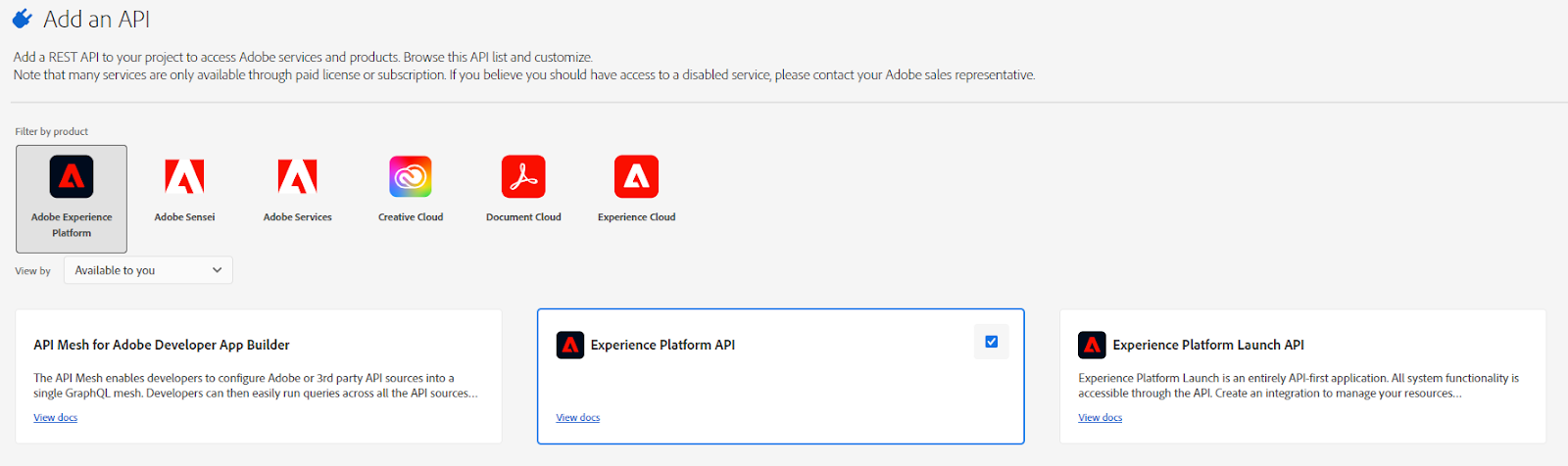

4. Add Experience Platform APIs

Select Adobe Experience Platform in the pop-up, “Experience Platform API”.

The next few screens ask you to select an existing key or upload a new one, then assign this API to the appropriate product profile.Select the appropriate option for your situation and at the end of the workflow[保存]Click. If you generate your credentials, keep them in a safe place as you will need them later.

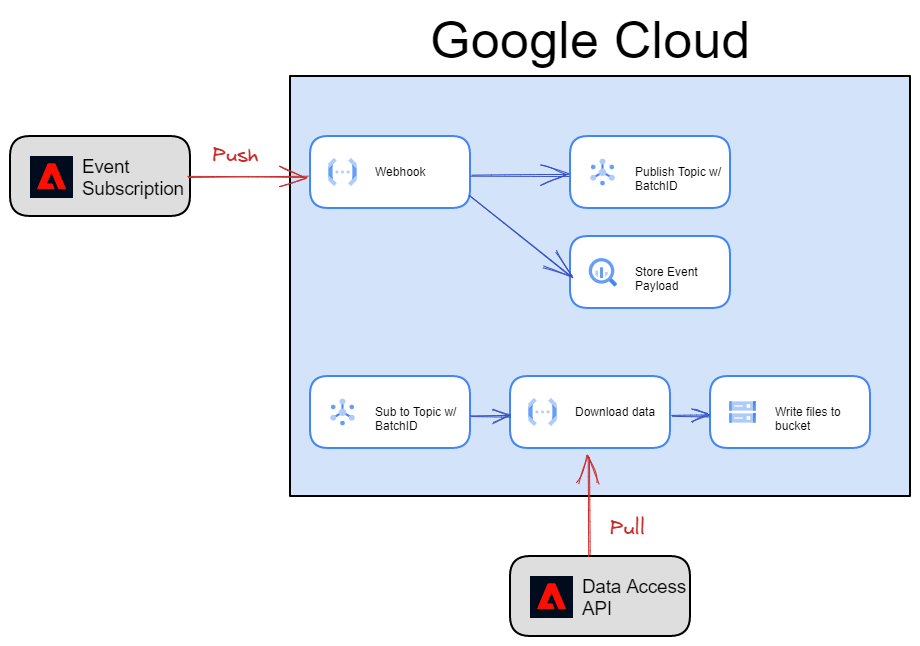

5. Proof of concept for solution architecture

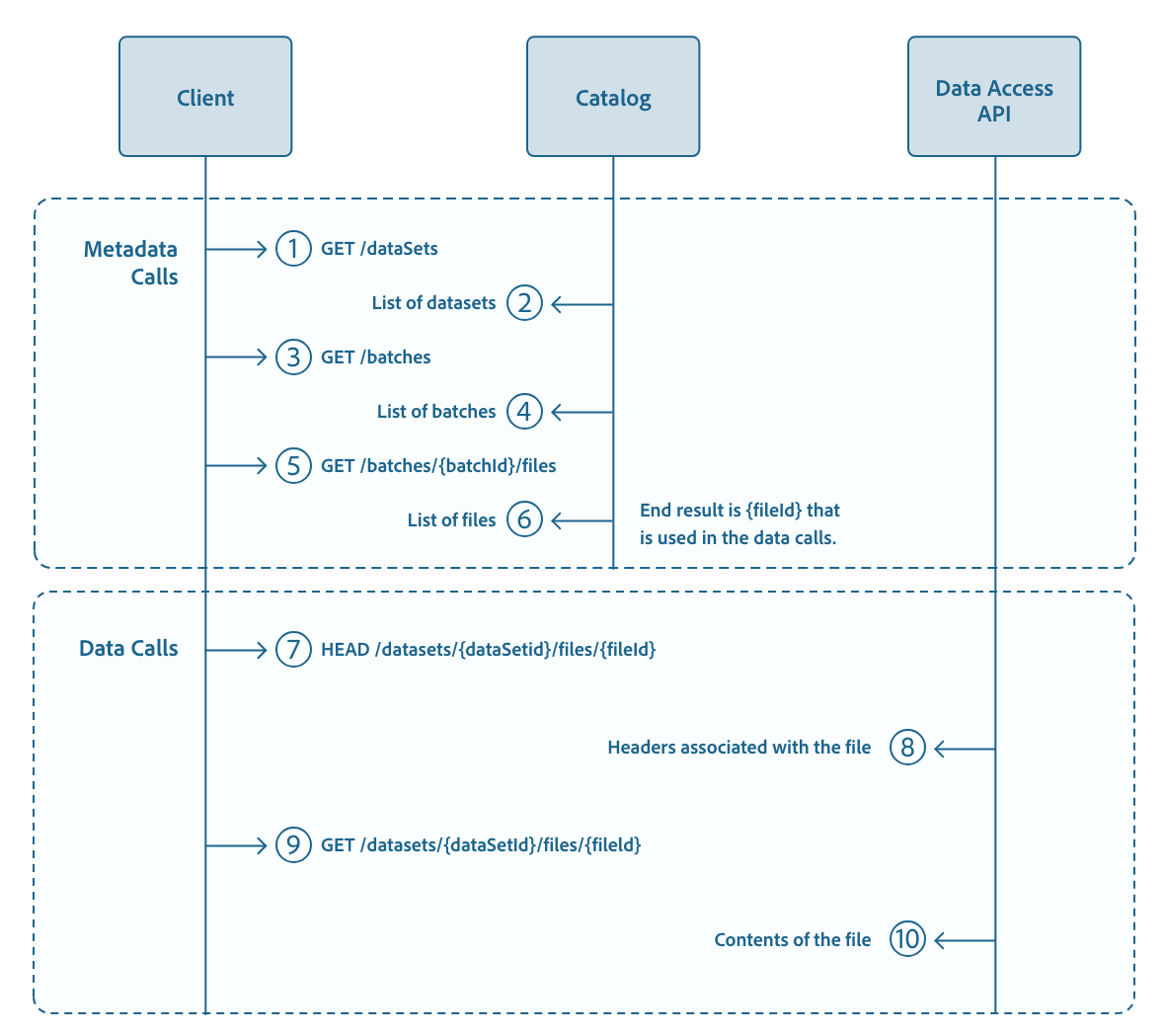

Below is a basic diagram showing what we use on Google Cloud Platform (GCP) for this PoC, starting with using a Google Cloud Function to host the webhook endpoint. This function listens for requests from the Adobe.IO event subscription, writes the payload to a BigQuery table for each request, and publishes an Adobe Batch ID to a Pub/Sub topic.

Then there is a second cloud function that subscribes to the Pub/Sub topic, performs data retrieval from the AEP, and writes the data to a Google Cloud Storage bucket.

This proof of concept is written in Python. Python is my language of choice and all the code can be found in this post. githubI’ve also put all the GCP command line (CLI) for creating gcp resources in the relevant readme file on Github.

As another side note, this PoC chose to use the new Gen2 Cloud Functions, which are still in beta at the time of writing. Remove beta and –gen2 from the CLI command if you want to use the gen1 feature. This article from Google has a good explanation of the differences between versions.

So let’s start with this actual proof of concept.

First, let’s look at a sample event subscription payload –

{

"event_id": "336ea0cb-c179-412c-b355-64a01189bf0a",

"event": {

"xdm:ingestionId": "01GB3ANK6ZA1C0Y13NY39VBNXN",

"xdm:customerIngestionId": "01GB3ANK6ZA1C0Y13NY39VBNXN",

"xdm:imsOrg": "xxx@AdobeOrg",

"xdm:completed": 1661190748771,

"xdm:datasetId": "6303b525863a561c075703c3",

"xdm:eventCode": "ing_load_success",

"xdm:sandboxName": "dev"

},

"recipient_client_id": "ecb122c02c2d44cab6555f016584634b"

}

The most interesting information here is event.xdm:ingestionId. This looks like the AEP’s batch_id. It also has a sandboxname and datasetId, both of which are useful for retrieving data from the data lake. You can find Adobe’s documentation on data ingestion notification payloads. here.

[Optional] Create a BigQuery table

This is optional, but as someone who’s worked with data systems for years, having a simple log table showing what’s been processed can really save you money later. In this case, just do a simple transformation and store the payload of the payload in her BQ.

bq mk \

--table \

mydataset.event_log \

schema.json

*Note* you can find schema.json file of webhooks folder on the Github repository.

6.Webhook function

First, as a quick prerequisite, create a new Pub/Sub topic where your function will publish.

gcloud pubsub topics create aep-webhook

Once it’s created, clone the code from GitHub, Navigate to a subdirectory of your webhooks directory and deploy it as a cloud function.

gcloud beta functions deploy aep-webhook-test \

--gen2 \

--runtime python39 \

--trigger-http \

--entry-point webhook \

--allow-unauthenticated \

--source . \

--set-env-vars BQ_DATASET=webhook,BQ_TABLE=event_log,PUBSUB_TOPIC=aep-webook

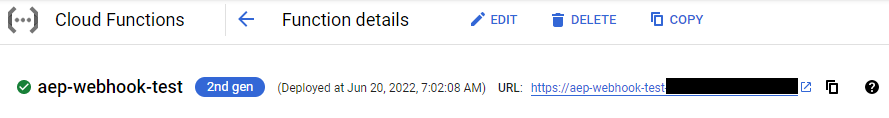

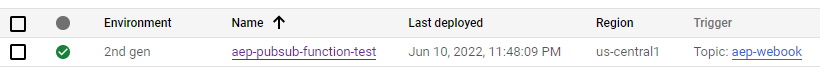

Once the deployment is complete, jump to the GCP console and navigate to Cloud Functions and you will see your new function. aep-webhook-test Deployed. Copy the new URL –

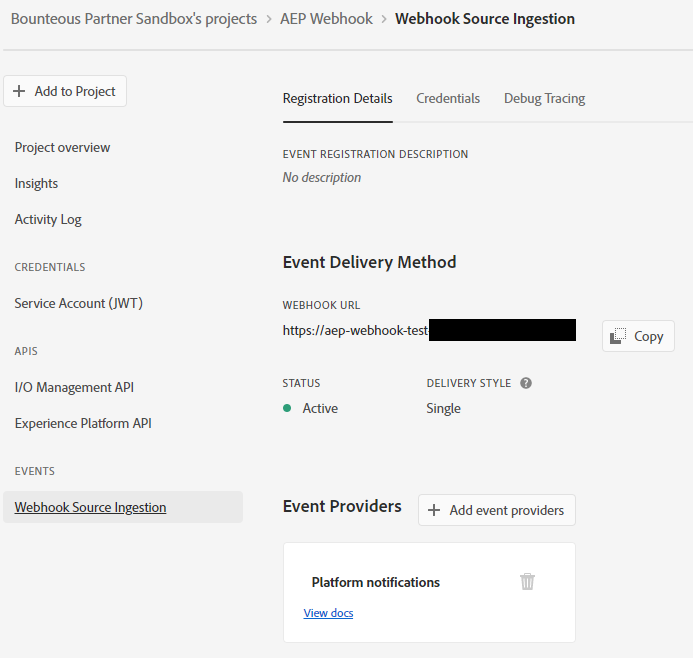

Then go back to the Adobe Developer Console and enter this URL in the Webhook URL.

You should see an immediate request to your new webhook function with a challenge parameter. If everything is deployed correctly, your new function will respond with a challenge response and the Adobe Console will show its status as Active. If not,[Debug Tracing]Starting from the tab, you can see the exact request Adobe sent and the response it received.

7. Data processing function

With the webhook function up and running, let’s move on and deploy the data processing function.

Let’s start by creating a storage bucket to retrieve data from –

gsutil mb gs://[yourname]-aep-webhook-poc

If you cloned the code from Github, change directory to subscribe-download-data, create a credentials folder, and drop the credentials you created earlier in the Adobe Developer Console. Note: This was done for a PoC and we recommend using KMS (Key Management System) to store credentials for real production pipelines.

gcloud beta functions deploy aep-pubsub-function-test \

--gen2 \

--runtime python39 \

--trigger-topic aep-webook \

--entry-point subscribe \

--source . \

--memory=512MB \

--timeout=540 \

--set-env-vars GCS_STORAGE_BUCKET=[yourname]-webhook-poc

If everything went well, you should see your function appear in GCP Cloud Functions after a few minutes.

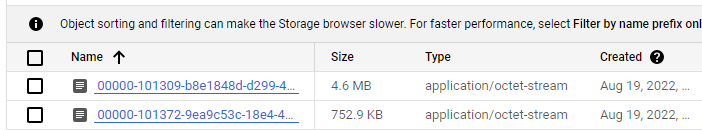

Depending on how busy your AEP environment is, it may take minutes or hours for the data to appear in your storage bucket.

It turns out that all the files are parquet files with somewhat cryptic names. This is the native format stored within the AEP data lake.

After export

You now have a simple pipeline that automatically downloads and stores .parquet files created in your AEP data lake. Obviously, we’ve just scratched the surface of what’s possible with the combination of event registration (webhooks) and data access APIs. Some ideas I had while working on this process –

- Place files in subfolders for each sandbox in your GCS bucket

- Use the API to find the dataset name associated with the parquet file and rename it to a more user-friendly name.

- Add failed ingestion paths to your code to automatically download failed data to another location and send notifications

Exporting data outside of AEP allows you to consider multiple use cases and activations. From this demo you can follow a few clearly outlined steps to complete. I hope this tutorial was informative, easy to understand, and perhaps inspired some new use cases for data activation!