The internet had a collective feel-good moment with the introduction of DALL-E, an artificial intelligence-based image generator inspired by the artist Salvador Dali and the lovable robot WALL-E, which uses natural language to produce whatever mysterious and beautiful image your heart desires. Seeing typed-out inputs like “smiling gopher with his cone of ice cream” instantly come to life clearly resonated with the world.

Getting that smiling gopher and its attributes onto the screen is no easy feat. DALL-E 2 uses something called a diffusion model, which tries to encode the entire text into one description to generate the image. However, when the text contains many details, it becomes difficult for a single description to capture everything. Additionally, while diffusion models are very flexible, they sometimes struggle to understand the composition of certain concepts, for example by confusing the attributes of different objects or the relationships between them.

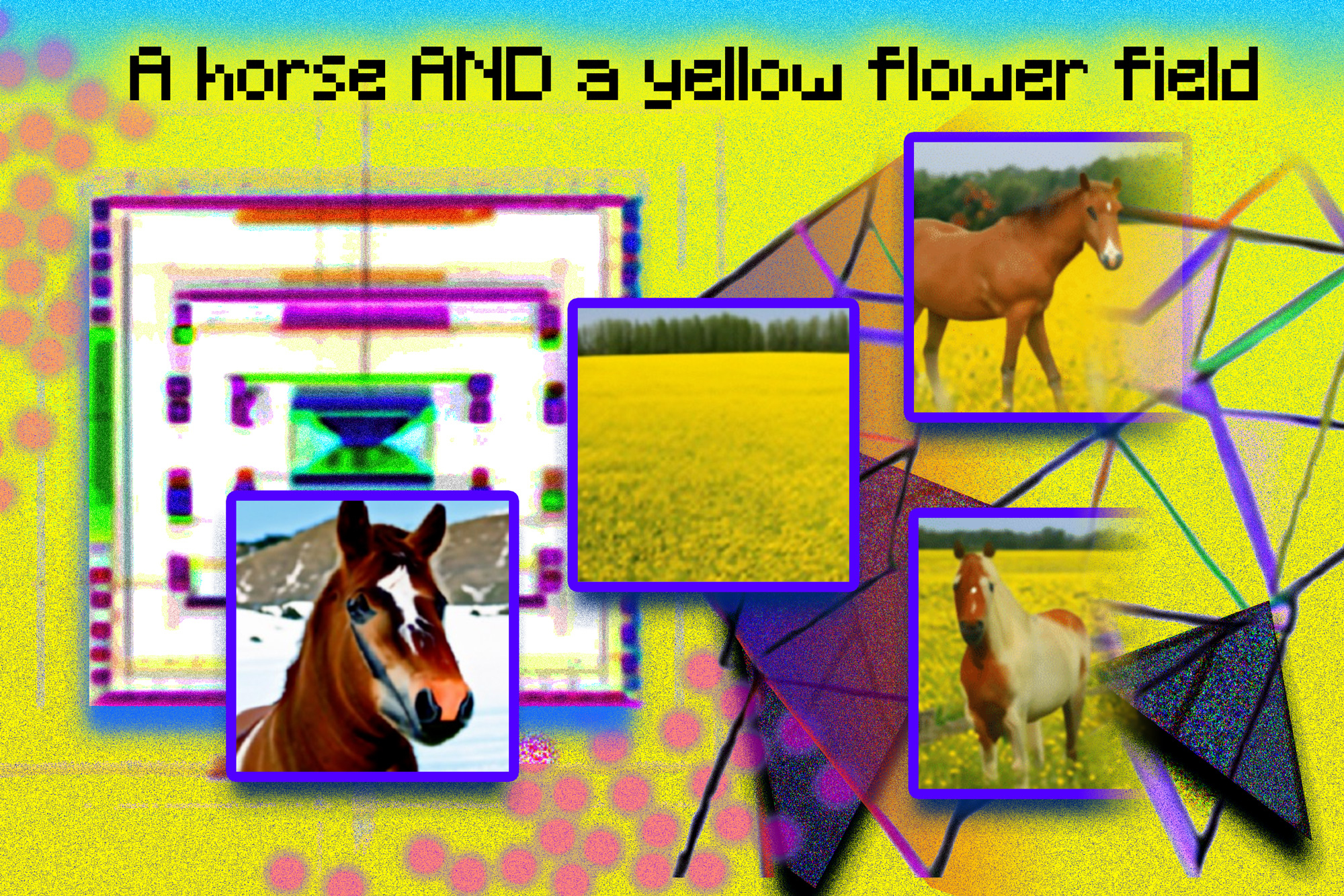

To generate more complex images with better understanding, scientists at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) approached the typical model from a different angle: they added a series of models together, all of which cooperate to produce an image that captures the multiple different aspects requested by the input text or labels. For example, to create an image with two components described by two captions, each model would work on a specific component of the image.

The seemingly magical models behind image generation work by suggesting a series of iterative refinement steps to arrive at the desired image. They start with a “bad” image and gradually refine it until it becomes the selected image. When multiple models are composed together, they jointly refine the appearance at each step, so the result is an image that shows all the attributes of each model. Having multiple models cooperate also makes more creative combinations possible in the resulting images.
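The idea of several models jointly refining one image can be illustrated with a toy sketch. This is not the team’s implementation: the two “models” are hypothetical stand-ins built by `pull_toward`, each nudging part of a 2-D point toward its own target, and `refine` is an assumed name for a noise-free loop that applies their summed suggestions at every step, starting from a deliberately “bad” point.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Model = Callable[[Vector], Vector]


def pull_toward(target: Sequence[float], mask: Sequence[float]) -> Model:
    """Hypothetical stand-in for one model: it only cares about the
    coordinates selected by `mask` and suggests moving them toward `target`."""
    def suggest(x: Vector) -> Vector:
        return [m * (t - xi) for xi, t, m in zip(x, target, mask)]
    return suggest


def refine(x: Vector, models: Sequence[Model],
           steps: int = 50, step_size: float = 0.2) -> Vector:
    """Start from a "bad" sample and apply every model's suggestion
    jointly at each step (toy refinement, no noise schedule)."""
    x = list(x)
    for _ in range(steps):
        suggestions = [model(x) for model in models]
        # Sum the per-model suggestions coordinate by coordinate.
        update = [sum(parts) for parts in zip(*suggestions)]
        x = [xi + step_size * ui for xi, ui in zip(x, update)]
    return x


# Model A controls coordinate 0, model B controls coordinate 1.
model_a = pull_toward(target=[1.0, 0.0], mask=[1.0, 0.0])
model_b = pull_toward(target=[0.0, -2.0], mask=[0.0, 1.0])

result = refine([5.0, 5.0], [model_a, model_b])
print([round(v, 3) for v in result])
```

Because both suggestions are applied at every step, the final point satisfies both models at once, which is the toy analogue of an image that shows the attributes requested from each model.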

Take, for example, a red truck and a green house. When the sentences describing them become very complex, the model confuses the concepts of the red truck and the green house. A typical generator like DALL-E 2 might swap the colors and produce a green truck and a red house. The team’s approach can handle this type of binding of attributes to objects, and, especially when there are multiple sets of things, it can treat each object more accurately.

“This model can effectively model object positions and relational descriptions, which is difficult for existing image generation models. DALL-E 2 is great at producing natural images, but it can have difficulty understanding object relationships,” says Shuang Li, an MIT CSAIL PhD student and co-lead author. “If you want to tell a child to put a cube on top of a sphere, saying this in words may be hard for the child to understand. But our model can generate the image and show them.”

Making Dali proud

Composable Diffusion, the team’s model, uses diffusion models together with compositional operators to combine text descriptions without further training. The team’s approach captures textual details more accurately than the original diffusion model, which directly encodes the words as a single long sentence. For example, given “a pink sky” AND “a blue mountain on the horizon” AND “cherry blossoms in front of the mountain,” the team’s model was able to generate that image accurately, whereas the original diffusion model made the sky blue and everything in front of the mountain pink.
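One way to picture a composition operator of this kind is as a rule for merging the per-caption predictions of a diffusion model at each denoising step. The sketch below is an illustration under stated assumptions, not the team’s code: `compose_and` is a hypothetical helper, the noise predictions are plain lists standing in for image-sized tensors, and the weighting follows the familiar classifier-free-guidance pattern of adding each caption’s offset from the unconditional prediction.

```python
from typing import List, Sequence

Noise = List[float]


def compose_and(
    eps_uncond: Noise,
    eps_conds: Sequence[Noise],
    weights: Sequence[float],
) -> Noise:
    """Combine per-caption noise predictions (hypothetical helper).

    Each caption contributes its offset from the unconditional prediction,
    scaled by its weight, so every caption steers the same denoising step.
    """
    if len(eps_conds) != len(weights):
        raise ValueError("need one weight per caption")
    combined = list(eps_uncond)
    for eps_c, w in zip(eps_conds, weights):
        for i, (c, u) in enumerate(zip(eps_c, eps_uncond)):
            combined[i] += w * (c - u)
    return combined


# Toy numbers standing in for model outputs on a 3-"pixel" image.
eps_uncond = [0.0, 0.0, 0.0]
eps_pink_sky = [1.0, 0.0, 0.0]         # assumed prediction for "a pink sky"
eps_blue_mountain = [0.0, 2.0, 0.0]    # assumed prediction for the mountain caption
eps_cherry = [0.0, 0.0, 3.0]           # assumed prediction for the blossoms caption

eps = compose_and(
    eps_uncond,
    [eps_pink_sky, eps_blue_mountain, eps_cherry],
    [1.0, 0.5, 2.0],
)
print(eps)
```

With a single caption and a weight of 1.0, this rule returns that caption’s prediction unchanged, so the composed case can be read as a generalization of ordinary text-conditioned sampling in which each caption keeps its own term instead of being folded into one long prompt.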

“The fact that our model is composable means that you can learn different parts of the model one at a time. You can learn one object, and then learn something to the left of another object,” says co-lead author and MIT CSAIL PhD student Yilun Du. “Because we can compose these together, you can imagine our system enabling us to learn language, relationships, or knowledge step by step, which we think is a very interesting direction for future research.”

Although the model demonstrated the ability to generate complex, photorealistic images, it still faced challenges: because it was trained on a much smaller dataset than models such as DALL-E 2, there were some objects that it simply could not capture.

Now that Composable Diffusion can work on top of generative models such as DALL-E 2, the scientists want to explore continual learning as a potential next step. Given that more is usually added to object relations over time, they want to see whether diffusion models can start to “learn” without forgetting previously learned knowledge, reaching a point where the model can generate images using both the previous and the new knowledge.

“This work proposes a new method for composing concepts in text-to-image generation, composing them using the negation operator,” says Mark Chen, co-creator of DALL-E 2 and a research scientist at OpenAI. “This is a nice idea that takes advantage of the energy-based interpretation of diffusion models, so that old ideas around compositionality using energy-based models can be applied. The approach can also make use of classifier-free guidance. It is surprising to see that it outperforms the GLIDE baseline on various compositional benchmarks and can qualitatively produce very different types of image generations.”

“Humans can compose a scene with a variety of elements in myriad ways, a task that is difficult for computers,” says Bryan Russell, research scientist at Adobe Systems. “This work proposes an elegant formulation that explicitly composes a set of diffusion models to generate images given complex natural language prompts.”

Along with Li and Du, the paper’s co-lead authors are Nan Liu, a master’s student in computer science at the University of Illinois at Urbana-Champaign, and MIT professors Antonio Torralba and Joshua B. Tenenbaum. They will present the work at the 2022 European Conference on Computer Vision.

This work was supported by Raytheon BBN Technologies Corp., Mitsubishi Electric Research Laboratory, and DEVCOM Army Research Laboratory.