In deep learning and computer vision, models usually tackle one specific task: there are models for image classification, object detection, and image segmentation. However, few models perform end-to-end visual perception covering both object detection and semantic segmentation. One of the most recent arXiv publications, HybridNets, addresses exactly this issue. In this tutorial, we give a very short and simple introduction to HybridNets with PyTorch.

End-to-end perception in computer vision is a crucial task for autonomous driving, yet few deep learning models can do it in real time. One reason may be that it is difficult to achieve. The new HybridNets model introduces novel ways to do this, which we briefly discuss later in the tutorial.

For now, let’s review the points covered in this tutorial.

- First, a brief description of the HybridNets model.

- Next, we set up the local system to run inference with HybridNets. This involves:

- Create a new environment.

- Clone the HybridNets repository.

- Install the requirements.

- Run inference on some videos.

- Finally, we analyze the results obtained from the HybridNets model.

The above points cover everything we do in our introduction to HybridNets using PyTorch.

A brief introduction to HybridNets

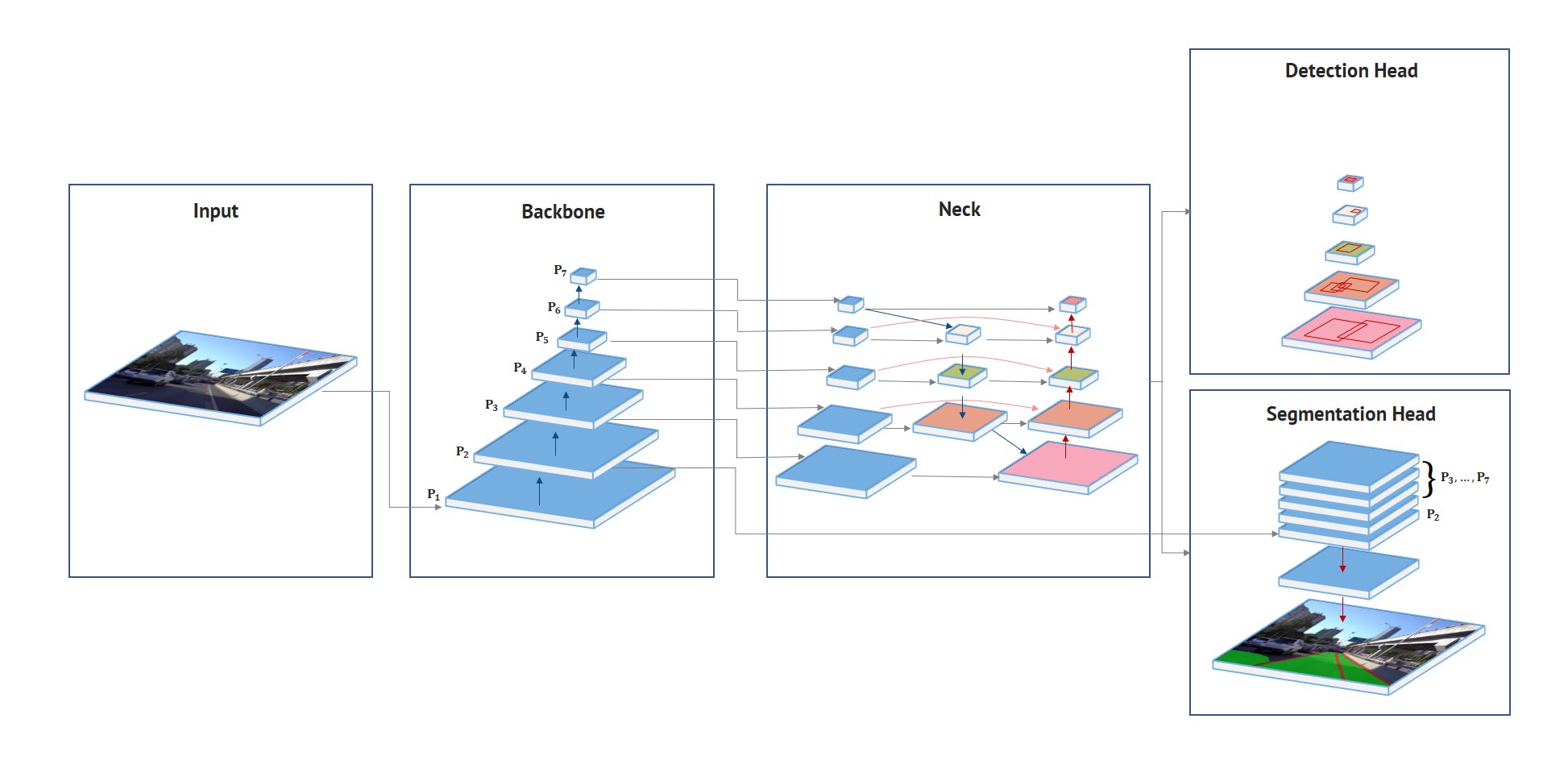

Before we dive into inference with PyTorch, let’s briefly introduce HybridNets. We will not discuss implementation details or results here, just give a very brief introduction. The overall network architecture will be detailed in another article.

The HybridNets paper, HybridNets: End-to-end Perception Networks, was published on arXiv by VT Dat et al. It is a very recent paper, from March 2022.

HybridNets also comes with an official GitHub repository containing code for both training and inference. We will come back to it a little later.

What does HybridNets do?

HybridNets is a deep learning model that performs both object detection and semantic segmentation. It is an end-to-end visual perception neural network intended for self-driving applications, and it was trained on the Berkeley DeepDrive (BDD100K) dataset.

Currently, the HybridNets model detects all vehicles under a single class, car (as per the current GitHub code). During training, the authors merged the car, bus, truck, and train classes into the car class, although the paper states that they are merged into a vehicle class. The class name string can change at any time, so this does not really matter.
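To make the class merging concrete, here is a minimal Python sketch that collapses the vehicle labels into one detection class. The label names follow the text above. The annotation layout (a dict with a "category" key, in the style of BDD100K JSON labels) and the helper name merge_vehicle_classes are assumptions for illustration, not the authors' training code. Non-vehicle boxes are dropped here on the assumption that the detector is trained only for the single vehicle class.

```python
# Hypothetical sketch: merge BDD100K-style vehicle labels into one class.
MERGED_VEHICLE_LABELS = {"car", "bus", "truck", "train"}
TARGET_CLASS = "car"  # the class string used by the current GitHub code, per the text


def merge_vehicle_classes(annotations):
    """Keep only vehicle boxes and relabel them as the single target class.

    Each annotation is assumed to be a dict with a "category" key; the
    input list is left unmodified and new dicts are returned.
    """
    merged = []
    for ann in annotations:
        if ann.get("category") in MERGED_VEHICLE_LABELS:
            merged.append({**ann, "category": TARGET_CLASS})
    return merged
```

For example, a list holding one "bus" box and one "pedestrian" box yields a single box labeled "car".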

The authors claim that the proposed HybridNets model is capable of multitasking, covering traffic object detection, drivable area segmentation, and lane detection. The following short clip does a good job of demonstrating the capabilities of the HybridNets neural network model.

Besides that, the authors claim that it outperforms other models on the same tasks, reporting 77.3 mAP for object detection and 31.6 mIoU for lane detection. It also runs in real time on a V100 GPU.
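For readers less familiar with the segmentation metric: IoU is the overlap between a predicted mask and the ground-truth mask divided by their union, and mIoU averages that value over classes. The NumPy sketch below shows the computation on label maps. The function names are hypothetical, and this does not reproduce the paper's exact evaluation protocol (for example, whether background is included in the average).

```python
import numpy as np


def binary_iou(pred, target):
    """IoU between two masks of the same shape (nonzero = positive)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return float("nan")  # class absent from both masks
    return float(np.logical_and(pred, target).sum() / union)


def mean_iou(pred_labels, target_labels, num_classes):
    """Average per-class IoU over integer label maps, skipping absent classes."""
    pred_labels = np.asarray(pred_labels)
    target_labels = np.asarray(target_labels)
    ious = [binary_iou(pred_labels == c, target_labels == c) for c in range(num_classes)]
    return float(np.nanmean(ious))
```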

The above points alone are enough to get you interested in the HybridNets model. The rest of this article will focus entirely on using HybridNets to perform inference on videos.

Directory structure

Before moving on to the technical part, let’s review the directory structure of this project.

├── custom_inference_script
│   └── video_inference.py
├── HybridNets
│   ├── backbone.py
│   ├── demo
│   ├── encoders
│   ├── hubconf.py
│   ├── hybridnets
│   ├── hybridnets_test.py
│   ├── hybridnets_test_videos.py
│   ├── images
│   ├── LICENSE
│   ├── projects
│   ├── requirements.txt
│   ├── train_ddp.py
│   ├── train.py
│   ├── tutorial
│   ├── utils
│   ├── val_ddp.py
│   └── val.py
└── input
    └── videos

- The custom_inference_script directory contains a custom script for running inference on videos. It borrows heavily from the code the author has already provided in the GitHub repository. Later, you will copy this script into the cloned GitHub repository so that it can easily import the required modules.

- The HybridNets directory is the cloned GitHub repository.

- The input directory contains the two videos we run inference on.

When you download the zip file for this tutorial, you get the custom inference script and the video files. You only need to clone the HybridNets repository if you want to run inference locally.

HybridNets using PyTorch for end-to-end detection and segmentation

That was a brief introduction to HybridNets. Now let’s dive into running inference with PyTorch.

Before running inference, you need to complete some prerequisites.


Create a new Conda environment

We recommend creating a new environment, because we need to install the requirements of the HybridNets repository. Depending on your preference, you can create either a Python virtual environment or a Conda environment. The following block shows sample commands to create and activate a new Conda environment.

conda create -n hybridnets python=3.8

conda activate hybridnets

Clone the repository and install the requirements

You will need to clone the HybridNets repository and install all the requirements. After downloading and extracting the files for this tutorial, be sure to clone the repository into the same directory.

git clone https://github.com/datvuthanh/HybridNets.git

cd HybridNets

Make sure that HybridNets is your current working directory, then install the requirements.

pip install -r requirements.txt

Our custom video inference script uses OpenCV for annotation and visualization, so let’s install it too.

pip install opencv-python

Download pretrained weights

Pretrained weights are required to perform inference. You can download them directly with the following command:

curl --create-dirs -L -o weights/hybridnets.pth https://github.com/datvuthanh/HybridNets/releases/download/v1.0/hybridnets.pth
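If curl is not available (for example, on some Windows setups), you can do the same download with Python's standard library. This is a minimal sketch. The helper name download_weights is hypothetical. It mirrors the curl flags above by creating the parent directory first and by relying on urllib following GitHub's release redirect.

```python
import os
import urllib.request

WEIGHTS_URL = "https://github.com/datvuthanh/HybridNets/releases/download/v1.0/hybridnets.pth"


def download_weights(url=WEIGHTS_URL, dest="weights/hybridnets.pth"):
    """Download url to dest, creating parent directories (like curl --create-dirs)."""
    parent = os.path.dirname(dest)
    if parent:
        os.makedirs(parent, exist_ok=True)
    if not os.path.exists(dest):  # skip the download if the file is already there
        # urllib follows HTTP redirects by default, similar to curl -L
        urllib.request.urlretrieve(url, dest)
    return dest
```

Run it from inside the cloned HybridNets directory so that the file ends up at weights/hybridnets.pth, the path used by the commands below.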

Before running inference, check the following:

- Run all inference commands inside the cloned HybridNets repository/directory. This will be your working directory.

- Our data/videos are one folder up, in the ../input/videos directory.

- The custom inference script (video_inference.py) from the downloaded custom_inference_script directory has been copied into the cloned HybridNets directory.
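You can also verify the checklist programmatically before a long run. This hypothetical helper assumes the layout described in the directory structure section: the cloned HybridNets directory as the working directory, the weights downloaded to weights/hybridnets.pth, the custom script copied in, and the videos in ../input/videos. Adjust the paths if your setup differs.

```python
from pathlib import Path


def check_setup(repo_dir="."):
    """Return a list of problems with the assumed project layout; empty means OK."""
    repo = Path(repo_dir).resolve()  # resolve so that .parent works for "."
    expected = {
        "cloned HybridNets repo (working directory)": repo / "hybridnets_test.py",
        "custom inference script": repo / "video_inference.py",
        "pretrained weights": repo / "weights" / "hybridnets.pth",
        "input videos directory": repo.parent / "input" / "videos",
    }
    return [f"missing {what}: {path}" for what, path in expected.items() if not path.exists()]


if __name__ == "__main__":
    for problem in check_setup():
        print(problem)
```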

Although the author provides a script for video inference (hybridnets_test_videos.py), we still use a custom inference script. Why? Mainly for convenience and a few fixes:

- At the time of writing, there seems to be a small problem with the FPS calculation at the end of the script. I have raised an issue about it on GitHub, and it may have been fixed by the time you read this.

- The custom script makes a few minor color changes to the visualization and the FPS annotations.
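To illustrate the kind of visualization tweak meant here, the sketch below uses NumPy to alpha-blend one color per segmentation class onto a frame. The class ids (1 for drivable area, 2 for lane lines), the BGR colors, and the alpha value are assumptions chosen for illustration. They are not taken from the custom script or the HybridNets repository.

```python
import numpy as np

# Hypothetical palette in BGR order (as OpenCV uses); the real script's colors may differ.
SEG_COLORS = {
    1: (0, 255, 0),  # assumed id for drivable area
    2: (0, 0, 255),  # assumed id for lane lines
}


def overlay_segmentation(frame, seg_map, colors=SEG_COLORS, alpha=0.5):
    """Alpha-blend a color onto every pixel where seg_map equals that class id.

    frame: HxWx3 uint8 image; seg_map: HxW integer class map.
    """
    out = frame.astype(np.float32)
    for class_id, color in colors.items():
        mask = seg_map == class_id
        out[mask] = (1 - alpha) * out[mask] + alpha * np.array(color, dtype=np.float32)
    return out.round().astype(np.uint8)
```

Changing the visualization then comes down to editing the palette or alpha, without touching the model code.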

Still, the custom script borrows heavily from the original code provided by the author, so all credit goes to the author.

Running image and video inference with HybridNets using PyTorch

Note: This tutorial does not go into the details of the inference scripts, that is, the code that loads the model, preprocesses the video frames, post-processes the outputs, and saves the images/frames to disk. HybridNets and its code will be discussed in more detail in future posts.
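For orientation only, here is a minimal NumPy sketch of a typical preprocessing step of the kind these scripts perform. It letterbox-resizes a BGR frame to the network input size, converts it to RGB, normalizes it, and adds a batch dimension. The 640x384 input size and the ImageNet mean/std are assumptions, so check the project config in the cloned repository. Nearest-neighbour resizing is used only to keep the sketch dependency-free; a real script would typically use cv2.resize.

```python
import numpy as np

# Assumed values; verify against the project configuration before relying on them.
INPUT_W, INPUT_H = 640, 384
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def letterbox(frame, new_w=INPUT_W, new_h=INPUT_H, pad_value=114):
    """Resize keeping aspect ratio (nearest neighbour), then pad to new_w x new_h."""
    h, w = frame.shape[:2]
    scale = min(new_w / w, new_h / h)
    rw, rh = int(round(w * scale)), int(round(h * scale))
    ys = np.minimum((np.arange(rh) / scale).astype(int), h - 1)
    xs = np.minimum((np.arange(rw) / scale).astype(int), w - 1)
    resized = frame[ys][:, xs]
    out = np.full((new_h, new_w, frame.shape[2]), pad_value, dtype=frame.dtype)
    top, left = (new_h - rh) // 2, (new_w - rw) // 2
    out[top:top + rh, left:left + rw] = resized
    return out, scale, (left, top)


def preprocess(frame_bgr):
    """Return a 1x3xHxW float32 array plus the scale/padding needed to undo the letterbox."""
    img, scale, pad = letterbox(frame_bgr)
    rgb = img[..., ::-1].astype(np.float32) / 255.0  # BGR (OpenCV order) -> RGB in [0, 1]
    norm = (rgb - MEAN) / STD
    chw = np.ascontiguousarray(norm.transpose(2, 0, 1))
    return chw[np.newaxis], scale, pad
```

The returned scale and padding are what post-processing would use to map predicted boxes and masks back onto the original frame.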

All inference results presented here were obtained on a laptop with a GTX 1060 6 GB GPU, an 8th-generation i7 processor, and 16 GB of RAM.

Run inference on images

The author has already provided a script, hybridnets_test.py, to perform inference on the images in the demo folder of the cloned repository. Let’s try it first.

python hybridnets_test.py -w weights/hybridnets.pth --source demo/image --output demo_result --imshow True --imwrite True

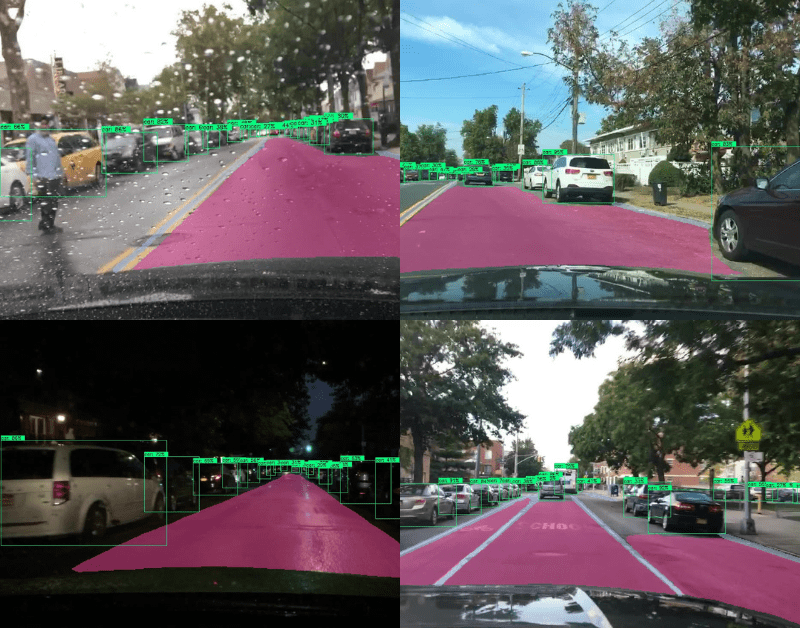

This displays the images on the screen and saves the results in the demo_result folder. Let’s look at some of the results.

The results look pretty good. The model works well even in rainy weather with water droplets on the windshield. Not only that, but the night-time results and the detection of small vehicles at great distances are also very accurate.

Running inference on videos

The author has also provided a video in the demo folder of the repository. Let’s run our custom script on that video first.

python video_inference.py --load_weights weights/hybridnets.pth

By default, the code uses CUDA if available. Once the execution is complete, you will see the following output in your terminal:

DETECTED SEGMENTATION MODE FROM WEIGHT AND PROJECT FILE: multiclass
video: demo/video/1.mp4
frame: 297
second: 89.08380365371704
fps: 3.3339393674128055
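You can check the printed summary by hand. The reported fps equals the frame count divided by the elapsed seconds (297 / 89.08 ≈ 3.33), so it measures the whole loop. The small parser below is a sketch that assumes the output keeps this video/frame/second/fps layout. It can be handy for collecting numbers from several runs; the names SUMMARY_RE and parse_summary are hypothetical.

```python
import re

# Assumes the custom script's "video: ... frame: N second: S fps: F" summary format.
SUMMARY_RE = re.compile(
    r"video:\s*(?P<video>\S+)\s+frame:\s*(?P<frames>\d+)\s+"
    r"second:\s*(?P<seconds>[\d.]+)\s+fps:\s*(?P<fps>[\d.]+)"
)


def parse_summary(log_text):
    """Return one dict per video summary found in the terminal output."""
    results = []
    for m in SUMMARY_RE.finditer(log_text):
        frames, seconds = int(m["frames"]), float(m["seconds"])
        results.append({
            "video": m["video"],
            "frames": frames,
            "seconds": seconds,
            "fps": float(m["fps"]),
            "recomputed_fps": frames / seconds,  # should match the reported fps
        })
    return results
```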

The FPS displayed in the terminal includes preprocessing and postprocessing time, so it is a little low. The FPS annotated on the video frames, on the other hand, covers only the forward pass. Let’s look at the video result.

For the forward pass, the model runs at 11-12 FPS. This is reasonable given that we are running on a laptop GPU and the model performs both detection and segmentation. Apart from that, the results are also impressive. It detects and segments almost everything quite well.
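To reproduce the distinction between the two FPS numbers, time the forward pass separately from the whole loop. The sketch below is framework-agnostic. The names preprocess, forward, and postprocess are placeholder callables that you would supply (hypothetical, not the custom script's API). With a CUDA model, GPU work runs asynchronously, so you would need to synchronize before reading the clock to get meaningful forward-pass times.

```python
import time


def run_pipeline(frames, preprocess, forward, postprocess):
    """Time a per-frame pipeline and return (end_to_end_fps, forward_only_fps)."""
    forward_time = 0.0
    start = time.perf_counter()
    for frame in frames:
        x = preprocess(frame)
        t0 = time.perf_counter()
        y = forward(x)
        # With a PyTorch CUDA model, call torch.cuda.synchronize() here before stopping the timer.
        forward_time += time.perf_counter() - t0
        postprocess(y)
    total_time = time.perf_counter() - start
    n = len(frames)
    return n / total_time, n / forward_time
```

The forward-only figure is always at least as high as the end-to-end one, which matches the gap between the annotated 11-12 FPS and the lower terminal FPS.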

Now let’s point the script to some slightly more difficult videos.

python video_inference.py --source ../input/videos/ --load_weights weights/hybridnets.pth

DETECTED SEGMENTATION MODE FROM WEIGHT AND PROJECT FILE: multiclass
video: ../input/videos/video_1.mp4
frame: 328
second: 50.41546940803528
fps: 6.505939622327962
video: ../input/videos/video_2.mp4
frame: 166
second: 28.105358123779297
fps: 5.906347084029902

This time, the videos have a lower resolution than the previous one, which results in a slightly higher end-to-end FPS.

In this video, the forward pass time remains the same. It is an evening scene, yet the model still segments the drivable area and lane boundaries quite well. However, it incorrectly detects one of the billboards as a car.

The next video is even more difficult, as it comes from Indian roads, which the model was not trained on at all. Here you can clearly see the limitations of the segmentation task. Detection is still very good, apart from falsely detecting the rearview mirror as a car.

Summary and conclusion

In this tutorial, we gave a very brief introduction to HybridNets and ran inference with it using PyTorch. We saw what the model can do and how it performs in different scenarios. Future posts will explore HybridNets in more detail. I hope you found this tutorial helpful.

If you have any questions, thoughts or suggestions, leave them in the comments section. I will certainly address them.

You can contact me through the Contact section. You can also find me on LinkedIn and Twitter.